
Podcast – GirlsTalkCyber – Episode 24

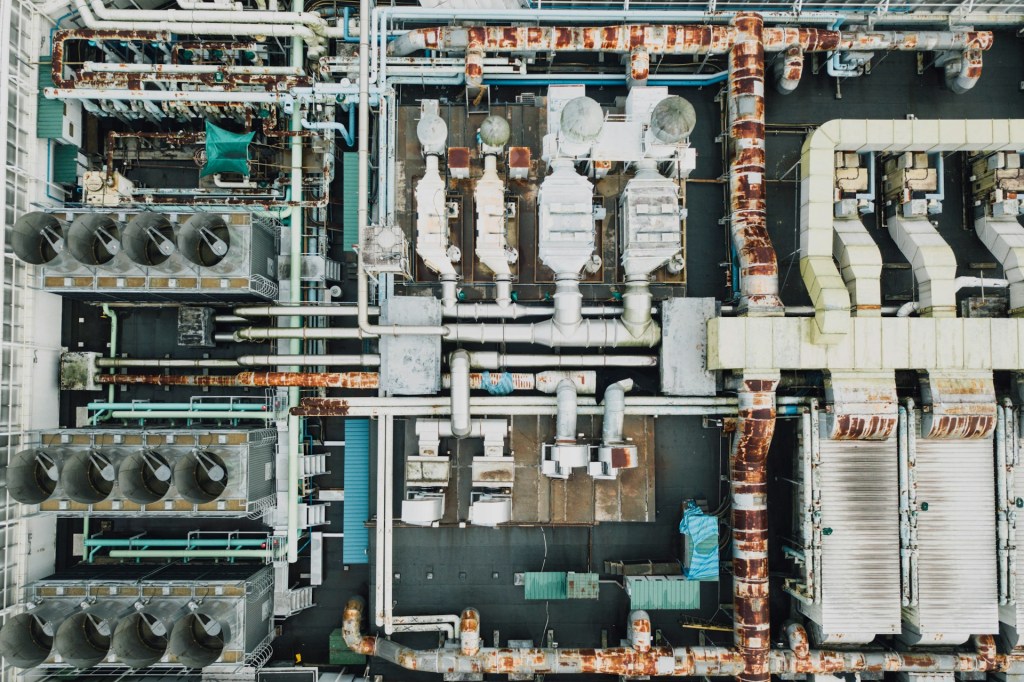

I spoke to the GirlsTalkCyber podcast about understanding and being aware of threats against critical infrastructure. We talked about what you should think about as geopolitical, economic, and climate instability increase across the world, and how that relates to cyber threats. https://girlstalkcyber.com/24-what-happens-if-hackers-poison-the-water-interview-with-lesley-carhart/

-

Smashing Security – 449: How to scam someone in seven days

I am so excited to be on Smashing Security! It's such a huge pleasure to finally make it onto one of my favorite podcasts of all time, with Graham Cluley! While I spoke about the jobs market and what students and hiring managers should be doing about it, Graham told me that…

-

My Top 5 Recommendations on OT Cybersecurity Student Upskilling

I get asked a lot about where to start learning OT cybersecurity as a student. I fully realize that attention spans are short and people are busy, so without further ado, let's get to my top five recommendations: I hope this gives you a few more ideas! Happy new year!

-

Destination Cyber Podcast on OT

Please see my recent podcast on OT foundations and current events with Destination Cyber from KBI.FM!

-

Reasonable Expectations for Cybersecurity Mentees

Most of my audience is on the more senior end of the career spectrum. As a result, a lot of my writing about careers is aimed at senior cybersecurity professionals, encouraging managers and experienced practitioners to support the next generation. But that doesn't mean newcomers are free from responsibility in…

-

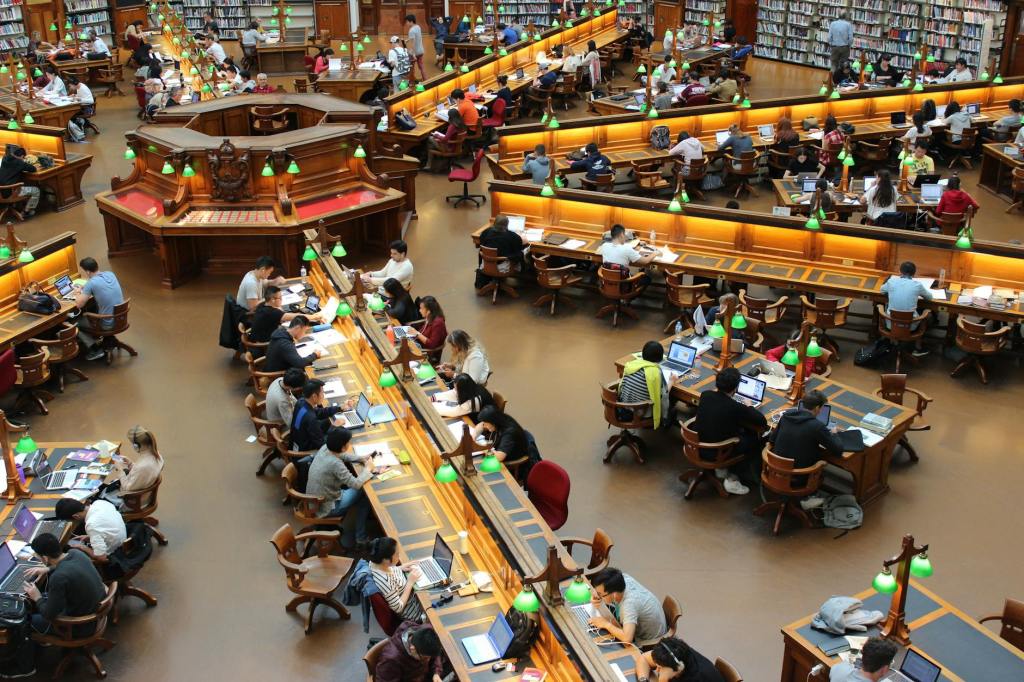

The Top 10 Things I’d Like to See in University OT Cybersecurity Curriculum (2025 Edition)

Most of you who have been following me for a while know that I have a very strange and unusual job in cybersecurity. I’m one of maybe a hundred or so people on earth who do full-time incident response and forensics for industrial devices and networks that are hacked.…

-

Open Online Mentoring Guide

I’ve had a sign-up for open online career mentoring on my site for quite a number of years now (in addition to running similar career clinics in person). As the program has gained more and more traction internationally, a lot of senior folks have asked how to set up…

-

Stories Ink Interviewed Me, and I Love Stories.

I was recently at the Tech Leaders Summit in Hunter Valley, and the inimitable Jennifer O’Brien covered my backstory and how I got into the odd space of Operational Technology. This is a nice change of format for people who aren’t into podcasts, and she tells such a good narrative.…

-

The National Cryptologic Foundation Podcast

It was a real honor to appear on the official podcast of the National Cryptologic Foundation, “Cyber Pulse”. They interview a wide range of intriguing personalities working in the cyber and cryptography space, and they asked me a broad range of challenging questions about everything from performing forensics on national critical…

-

I’m in Melbourne, and PancakesCon 6 is On!

Hello all! It’s my pleasure to announce that I’m settled enough to run my free educational conference for the sixth year. It will be a bit late this year, on September 21st. I invite you to check out the website at https://www.pancakescon.com as well as our associated socials, where you can…

Hello,

I’m Lesley, aka Hacks4Pancakes

Nice to meet you. I’m a long-time digital forensics and incident response professional, specializing in industrial control and critical infrastructure environments. I teach, lecture, speak, and write about cybersecurity.

I’m from Chicago, living in Melbourne.
